And what effect could the monoculture they create have on our information supply?

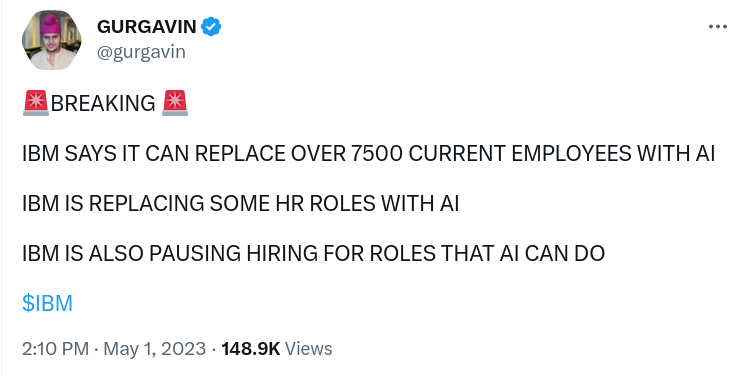

Our last episode kicked off with a tweet from Deep Dive recurring character Gurgavin, who yelled:

COMPANIES WILL USE THEM TO DO THE THINGS THEY PAY PEOPLE TO DO NOW

YOU CAN TELL IT’S SERIOUS BECAUSE I’M YELLING IT AT YOU UNDER A SIREN

We discussed this curious treatment of an assertion that IBM made to Bloomberg, and how its re-cycling through the media and content environment suits IBM just fine.

Those of us in the news content business are paying close attention to AI, because the ready-for-primetime applications like ChatGPT and Google Bard are “contributing” to our sphere more than any others. Bloomberg, for instance, has identified 49 websites spun up recently to pepper the world with AI-generated stories about whatever clicks.

Presumably, the bots monitor trending topics and search other news sites, forums, and social media for information on those topics. They then stitch it all together with “original” computer-generated prose that isn’t a copy-and-paste, so it isn’t plagiarism, and post the stories.

Bloomberg’s main objection here appears to be a lack of fact-checking and editorial oversight. A reasonable complaint, but what was content-farm news’ excuse for shoddy work when it was using people to make these stories?

The Bots Are Now Generating Fake News at a 2014 Level

The mainstream news was in a similar twist in 2017, when the organic shares being generated by expanding social-media powerhouse Facebook made it commercial to throw up “news” websites that were invented yesterday, would only be around as long as they kept getting traffic, and ran stories built around sensational outright lies.

Following a 2016 Buzzfeed revelation that headlines about Hillary Clinton being arrested for treason and the Pope endorsing Donald Trump were being generated by Macedonian teenagers, who had found that such headlines traveled a lot better than most other fake news articles, the sudden “Fake News” epidemic was covered extensively by real news outlets. Those outlets were egg-faced about having given President-elect Trump no chance of beating Clinton, so they got pretty righteous about it.

The crusade was going strong for a while, reporters and editors having convinced themselves, by reading each other’s stories, that it was something the public cared about. But it came to a screeching halt the first time anyone brought it up with President-elect Donald Trump, who replied:

“Fake News? You’re Fake News!”

That bit of (accidental?) genius was an extension of a tone that had been set in the Trump campaign, and he was happy to bring it to the White House. The public hates corporate media, and can identify with a candidate who talks down to it. Jilted by a candidate who “hated” them so much that he’s been thirstily chasing them for several decades, the press obsessed itself with ruining Trump, and jammed the channels with high-effort attempts at embarrassing him. Trying to shame a man who can’t be shamed amounted to pumping oxygen into the inferno, and the rest is history.

In that moment, Trump showed us why AI-generated work meant to be consumed by humans will never really stick: it isn’t human. And it can’t be.

The Macedonian kids got called “Russian bots” a lot by the press, but there’s no evidence that the stories weren’t written by real people. The 140 websites that Buzzfeed identified all had names like TheRightists.com, BVAnews.com, and WorldPoliticus.com, and weren’t taken seriously by anyone.

Similarly, the 49 news sites that Bloomberg has identified as hosting actual bot-generated content don’t appear to be anything that anyone has ever heard of. The bots that man the sites have probably done many hundreds of thousands of hours more reading than Trump ever has or will. They’ve probably even watched more television than he has, and can certainly process and respond quicker. But could they ever come up with a reply line to a reporter like “Fake News? You’re Fake News!” and make it count?

Even if someone wrote and refined a pithiness subroutine, an AI bot could never execute the aim and timing necessary to deliver such a deadly strike, or have the wherewithal to just drop the mic and ignore future inquiries from the reporter who had just been totally and irreparably owned. No amount of knowledge can be assembled to create that kind of performance. It takes understanding. And computers don’t understand. They just take instructions and create output.

Chat bots aren’t reporters, they’re information aggregators. They aren’t writers, either. They’re mimics.

The stories they generate don’t have any juice, because they aren’t human. They can’t think around corners and anticipate how a human reader might feel about the story, what they’d want out of it, how it could be more interesting or funny. The bots, and their handlers, are doing the same thing as Gurgavin: “Look! Information! We’re informing you!”

To the extent that the audience for such content generates a volume of clicks that advertisers are willing to buy, content farming is commercial activity. Google parent-co Alphabet, effectively one of the world’s largest advertising businesses, is in a position to approve or disapprove websites that use its AI products to generate news stories as ad distributors, and determine how they ought to be treated by search-ranking algorithms.

The company’s comment to Bloomberg makes it sound like it’s got safeguards against letting bot content take over the infosphere, but one might expect that those policies will change according to what’s best for Google’s top and bottom lines. Fortunately, this has all the makings of a self-regulating process.

But can’t the bot content sites just be used to advertise to other bots?

With such a low barrier to entry (anything special about AI tools? Not really, right? Basically over-built search engines that anyone can use?), it’s no wonder Bloomberg found 49 websites full of bot stories without looking too hard.

Until these bots are getting people to subscribe and come back, generating some fan mail or hate mail, there is clearly a saturation point for this model, where the advertising money has extended itself across as many low-grade clicks as it cares to.

Since AI “writers” are aggregators and regurgitators of information, instead of understanders and contextualizers of information, rapid degradation is always looming just beyond the limit of fresh input. The AI reads other stories to generate its stories. What happens when THOSE stories are AI-generated?

There is no way for a system in a loop to maintain critical mass. As it feeds on its own output, AI content farming will get thin and unreadable at any outfit that can’t access enough human-generated content to feed new fuel into the machines.

It’s possible that the Gurgavins and FakeBreakingNewsDomain.coms of the world get replaced by bots, to the extent that the search engines that sell the bots as a service allow it…

…but they’ll never replace The Deep Dive. Thanks for reading.

Information for this story was found via company filings, and the sources mentioned. The author has no securities or affiliations related to the organizations discussed. Not a recommendation to buy or sell. Always do additional research and consult a professional before purchasing a security. The author holds no licenses.