OpenAI, the company behind the internet-breaking "human-like" language model ChatGPT, on Tuesday released a new tool that could help quell, or at least begin to quell, some educators' concerns about the chatbot.

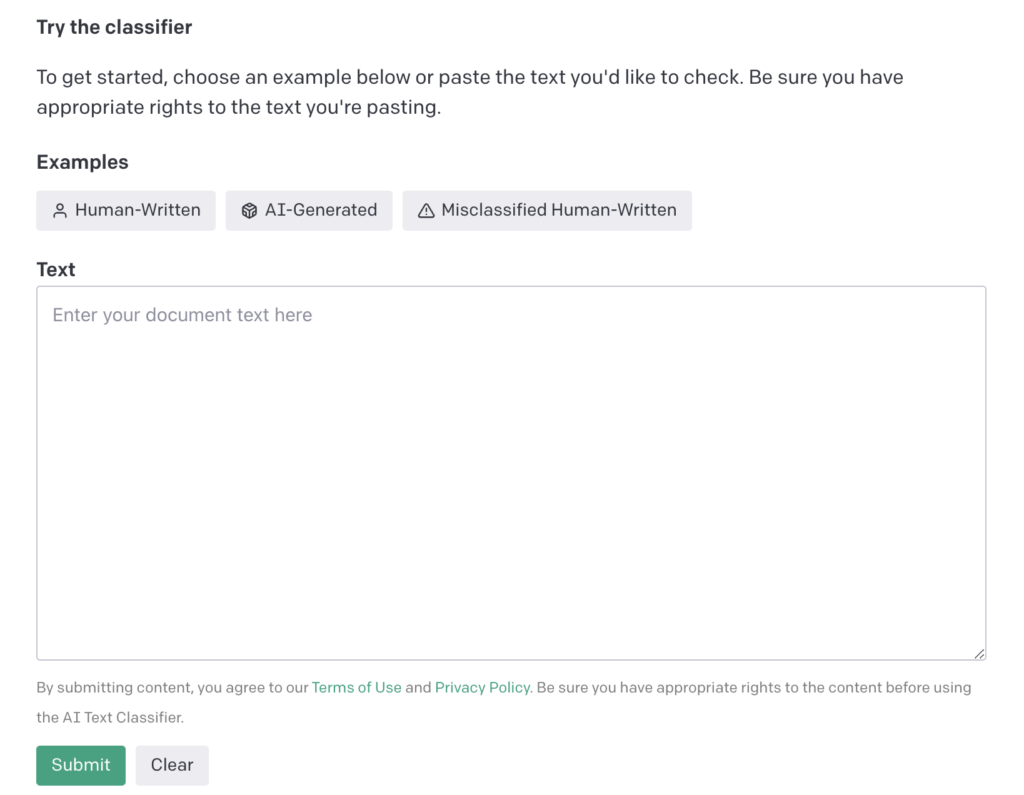

The new tool, called AI Text Classifier, is trained to distinguish text written by humans from text written by AI, whether by ChatGPT or by models from other providers. OpenAI warns, however, that it is not fully reliable. According to the company, it is impossible to reliably detect all AI-written text, but the classifier can help "inform mitigations for false claims that AI-generated text was written by a human."

OpenAI gives three examples: AI-generated text used to automate misinformation campaigns, an AI chatbot passed off as a human, and, perhaps the most pressing concern lately, AI tools used for "academic dishonesty," or, put simply, to cheat in school.

Since ChatGPT's public release in November, many students have taken advantage of its remarkable writing abilities, fueling a debate among educators over how far the still-free tool should be allowed into their classrooms. Many institutions have already banned ChatGPT, while some educators have begun adapting their teaching in response to the powerful language model.


The classifier, which is currently available to the public for testing and research, labels submitted text on a five-point scale: very unlikely, unlikely, unclear if it is, possibly, or likely AI-generated. OpenAI stresses, however, that the tool has many limitations; for example, it becomes "very unreliable" on inputs shorter than 1,000 characters.

The developer says that while the classifier's results may help determine whether an AI tool was used to create a text, they should not be used as the sole piece of evidence. It also cautions that "the model is trained on human-written text from a variety of sources, which may not be representative of all kinds of human-written text."

“We really don’t recommend taking this tool in isolation because we know that it can be wrong and will be wrong at times — much like using AI for any kind of assessment purposes,” Lama Ahmad, policy research director at OpenAI, told CNN. “We are emphasizing how important it is to keep a human in the loop … and that it’s just one data point among many others.”

The AI Text Classifier isn't the first tool built to keep ChatGPT and similar chatbots from being used for academic dishonesty. Edward Tian, a Princeton student, is currently beta-testing his own detector, GPTZero, and says he has received thousands of requests from educators.

OpenAI encourages educators and the general public to help improve the classifier by sharing their feedback. The company says it will continue its discussions with institutions to help teachers understand the capabilities and limitations of AI.

Information for this briefing was found via OpenAI, CNN, and the sources and companies mentioned. The author has no securities or affiliations related to this organization. Not a recommendation to buy or sell. Always do additional research and consult a professional before purchasing a security. The author holds no licenses.