If ChatGPT were a real person, it might be a medical doctor with a master's degree in business. In reality, OpenAI's ubiquitous chatbot is showing that it can pass the exams students take to earn those credentials.

A recent medical research study found that ChatGPT “performed at or near the passing threshold” on the United States Medical Licensing Exam (USMLE). It also found that the chatbot demonstrated a high level of concordance and insight in the explanations for its answers.

“These results suggest that large language models may have the potential to assist with medical education, and potentially, clinical decision-making,” the researchers wrote.

The study was conducted by medical researchers from California-based healthcare startup AnsibleHealth together with the team behind ChatGPT.

The USMLE is a comprehensive three-step standardized testing program covering all topics in a physician's fund of knowledge, spanning basic science, clinical reasoning, medical management, and bioethics. Completing all three steps with a passing grade usually takes a student more than six years, progressing from basic medical science through postgraduate medical education.

Step 3 is taken by doctors who have finished 0.5-1 yr of postgrad medical education.

and astonishingly chatGPT can pass today. AI doctor might be here faster than expected @Willyintheworld @dbsable @nealkhosla

— Noor Siddiqui (@noor_siddiqui_) January 22, 2023

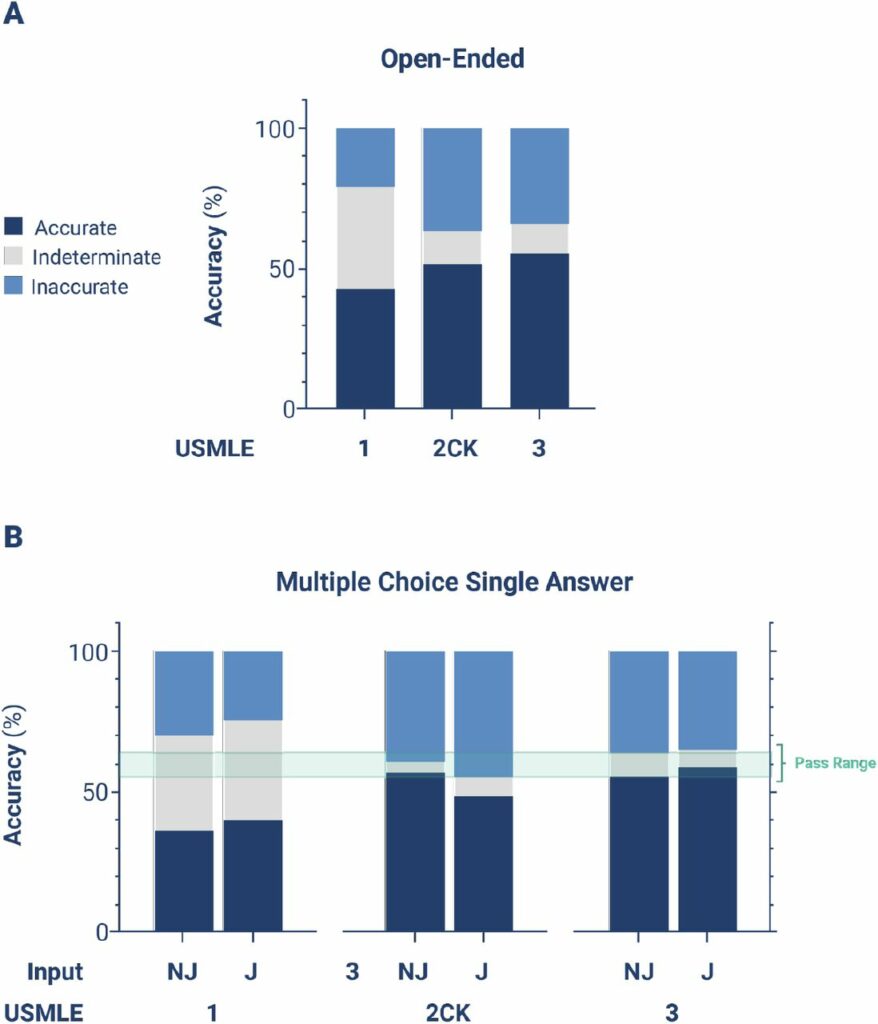

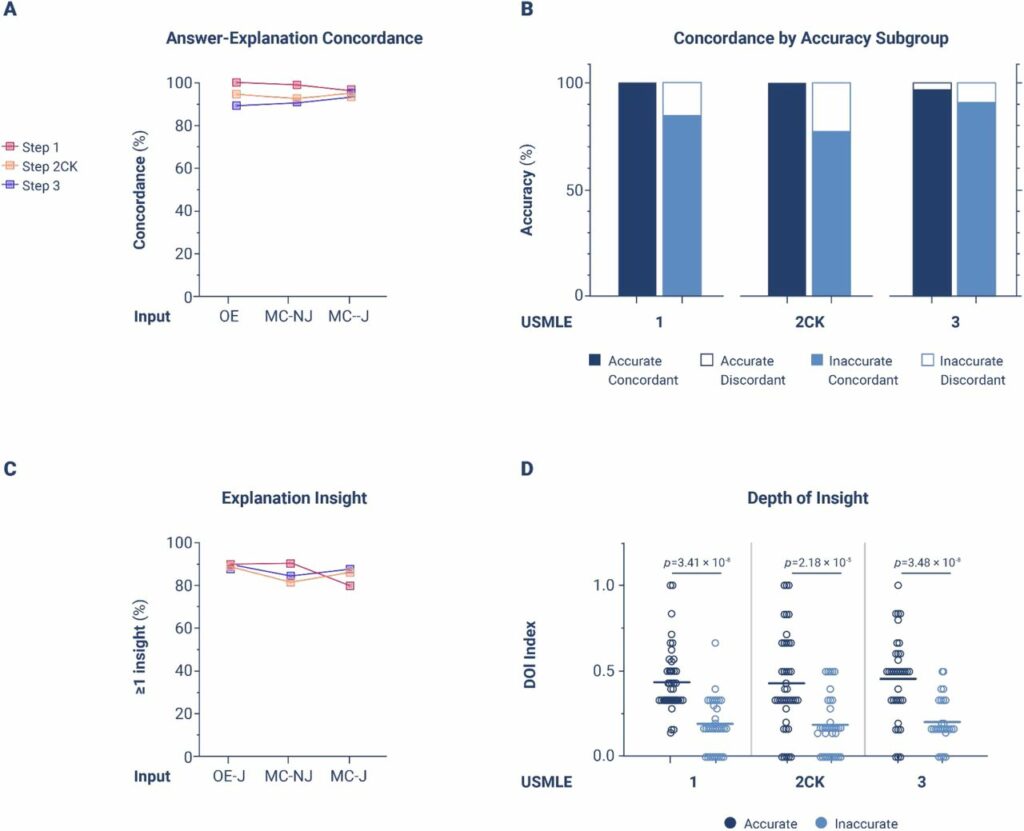

With indeterminate responses censored, ChatGPT had an accuracy rate of 68.0% for USMLE Step 1, 58.3% for Step 2CK, and 62.4% for Step 3 on the open-ended questions.

On multiple-choice questions, ChatGPT's accuracy rates were 55.1%, 59.1%, and 60.9%, respectively. When required to justify its answer, the chatbot's accuracy on these questions was 62.3%, 51.9%, and 64.6%, again with indeterminate responses censored.

The researchers concluded that ChatGPT “yields moderate accuracy approaching passing performance on USMLE.”

A: Accuracy distribution for inputs encoded as open-ended questions

B: Accuracy distribution for inputs encoded as multiple choice single answer without (MC-NJ) or with forced justification (MC-J)

Source: Performance of ChatGPT on USMLE: Potential for AI-Assisted Medical Education Using Large Language Models

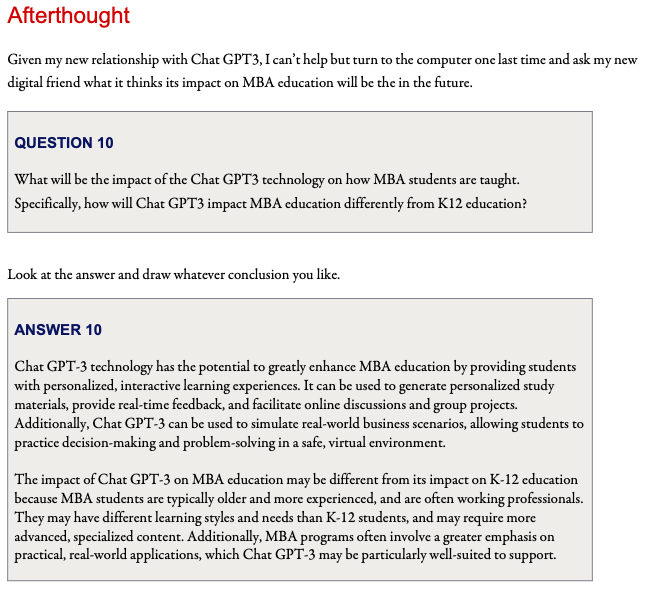

The chatbot also reportedly demonstrated “high internal concordance.” Two physician adjudicators found 94.6% concordance between its answers and explanations across all questions.

ChatGPT also yielded insights from its answers that the researchers believe “may assist the human learner,” producing at least one significant insight in 88.9% of all responses.

A: Overall concordance across all exam types and question encoding formats

B: Concordance rates stratified between accurate vs inaccurate outputs, across all exam types and question encoding formats.

C: Overall insight prevalence, defined as proportion of outputs with ≥1 insight, across all exams for questions encoded in MC-J format

D: Density of insight (DOI) stratified between accurate vs inaccurate outputs, across all exam types for questions encoded in MC-J format. Horizontal line indicates the mean.

Source: Performance of ChatGPT on USMLE: Potential for AI-Assisted Medical Education Using Large Language Models

“Our findings can be organized into two major themes: (1) the rising accuracy of ChatGPT, which approaches or exceeds the passing threshold for USMLE; and (2) the potential for this AI to generate novel insights that can assist human learners in a medical education setting,” the researchers wrote.

ChatMBA

While the researchers behind the study, who included the ChatGPT team, see this as a positive use for the chatbot, a professor at the University of Pennsylvania's Wharton School of Business is sounding the alarm after ChatGPT passed the final exam of one of the prestigious school's MBA courses.

Professor Christian Terwiesch published a research paper documenting ChatGPT's performance on the final exam of a typical MBA core course, Operations Management. Based on that performance, he concluded that the chatbot “would have received a B to B- grade on the exam.”

In summarizing the chatbot’s performance on the MBA final exam, he first said that it “does an amazing job at basic operations management and process analysis questions.”

“Not only are the answers correct, but the explanations are excellent,” he added.

However, ChatGPT also “makes surprising mistakes in relatively simple calculations at the level of 6th grade Math.” He also said that the current version of the chatbot “is not capable of handling more advanced process analysis questions, even when they are based on fairly standard templates.”

Terwiesch also found that ChatGPT “is remarkably good at modifying its answers in response to human hints… able to correct itself after receiving an appropriate hint from a human expert.”

“This has important implications for business school education, including the need for exam policies, curriculum design focusing on collaboration between human and AI, opportunities to simulate real world decision making processes, the need to teach creative problem solving, improved teaching productivity, and more,” the professor concluded.

Anthropic's Claude AI, a chatbot similar to OpenAI's ChatGPT, has also earned a “marginal pass” on a blind-graded law and economics test at George Mason University, according to Professor Alex Tabarrok.

The professor believes the rival chatbot, which examiners described as “better than many human” candidates, is an improvement on OpenAI's model, though it still has significant flaws compared with the best human students.

As an example, Tabarrok cited a question about how intellectual property law could be improved. Claude gave a 400-word response outlining five potential improvements to IP law, but the answer reportedly lacked clear reasoning.

“The weakness of the answer was that this was mostly opinion with just a touch of support,” Tabarrok said. “A better answer would have tied the opinion more clearly to economic reasoning. Still a credible response and better than many human responses.”

Most recently, ChatGPT reportedly passed the Turing test, which gauges whether a machine can exhibit intelligent behavior indistinguishable from a human's. That would make it the second chatbot, after Google's LaMDA, to accomplish such a feat.

ChatGPT did this by convincing a panel of judges that it was human, drawing on natural language processing, dialogue management, and social skills.

ChatGPT reportedly performed admirably, conversing with human evaluators and convincingly mimicking human responses across a series of tests. In some cases, evaluators could not tell ChatGPT's responses apart from those of humans.

Although the student revealed it later, I did NOT notice anything wrong with the initial response. It was a good, intelligent seminar answer.

This can now be done live and routinely in university seminar discussions

I’m not even sure it’s a bad thing

— Jon Agar (@jon_agar) December 6, 2022

OpenAI, the research lab behind ChatGPT, is reportedly in talks to sell existing shares in a tender offer that would value the company at roughly $29 billion. That valuation would make it one of the most valuable US firms on paper, despite generating little revenue.

READ: OpenAI’s ChatGPT Reportedly In Talks For Tender Offer Putting Firm At $29 Billion Valuation

However, OpenAI's history is not without blemish. According to an investigation by Time magazine, the research lab relied on Kenyan workers to review sexually explicit and violent content in order to train the algorithm to avoid toxic language.

READ: OpenAI Exploited Low-Paid African Workers to Train ChatGPT’s AI System

Microsoft Corp. is also in advanced talks to expand its stake in OpenAI. Microsoft invested $1 billion in OpenAI in 2019 and became its preferred partner for commercializing breakthrough technologies in services such as the Bing search engine and the Microsoft Designer design tool.

Information for this briefing was found via Fortune, Metaverse Post, Independent, and the sources mentioned. The author has no securities or affiliations related to this organization. Not a recommendation to buy or sell. Always do additional research and consult a professional before purchasing a security. The author holds no licenses.